KernelCI Community Survey Report

We are thrilled to share with you the results of our first KernelCI Community Survey. It has been a very interesting experience, with just under 100 responses from people who all provided quality feedback. We are really thankful for every single one of them. It was also a great way to engage more widely with the community. The full results are available for everyone to see in a shared spreadsheet. Individual comments are not shared publicly, but they are very valuable and will be taken into account.

In case you missed it and would have liked to respond to the survey, we are opening it again until the end of August using the same Google Form. If you have any other comments or questions, please feel free to send them to the kernelci@lists.linux.dev mailing list or get in touch on the #kernelci IRC channel on libera.chat.

Main Takeaway: Test the Patches

A few things came out very clearly from the survey and we are taking them into account while setting the next priorities for the project. The most important one by far is the need for testing individual patches sent on mailing lists. It has been on our radar for a while, but this survey shows how it would really take KernelCI to the next level: targeted reporting, shorter turnaround, early detection of issues. The existing automated bisection has proven how valuable it is in particular for linux-next and stable-rc to be able to report issues directly to the commit authors and relevant maintainers, so we should keep improving on that too.

Profiles of Respondents

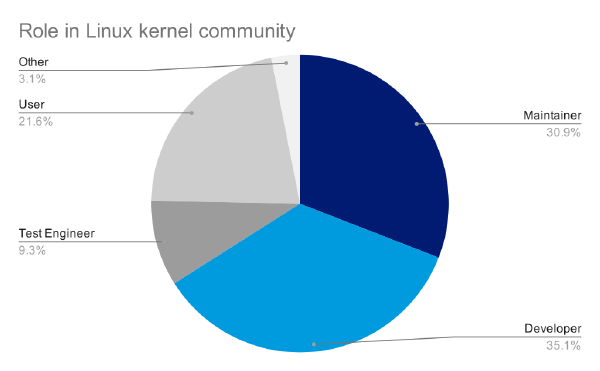

We can see two main categories of people: maintainers and developers, each making up about one third of respondents. It’s great to have this level of response from maintainers as they play a key role in the kernel development workflow and can lead the way to use more automated testing.

The last third is made up of many small categories such as regular users, managers, researchers and test engineers. They all play an important role in the community, and it was very useful to find out more about what KernelCI means to them. However, the overall low number of test engineers given the audience we’ve reached probably means that automated test systems aren't being developed as much as they could be, and that maintainers and developers rely mostly on their own testing.

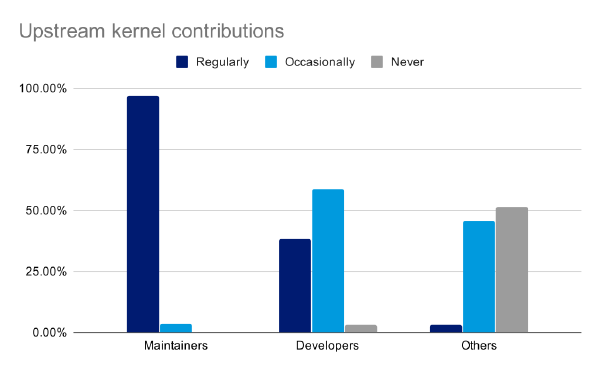

What is also interesting to see is the high degree of contribution to the upstream kernel, as even half of non-developers do so at least occasionally. This probably means that about 80% of the total number of respondents have contributed at least once while 50% contribute very regularly.

Reporting

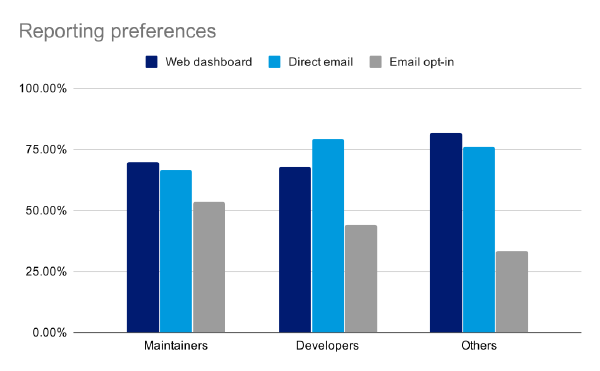

On the reporting front, what clearly stands out is that sending emails directly to the relevant people is the most effective way of reporting regressions. This is currently done using automated bisections to isolate individual commits. To report issues earlier, we would need to add support for testing patches sent to mailing lists before they are applied to Git branches.

About 70% of all respondents are interested in using a web dashboard, which means it is also an area worth improving. However, providing ways to work solely using emails and command line tools is also an important requirement as a number of maintainers don’t want to have to deal with a web dashboard at all. This is probably where the “opt-in” scheme for larger email reports with summaries of the full test coverage becomes more useful.

Response Time

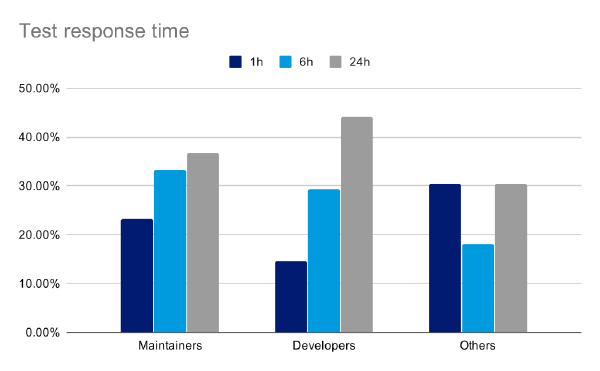

Automated testing comes in many shapes, with some tests being quick and others very long. A key piece of information is that 24h appears to be an acceptable timeframe for providing comprehensive test results. This means automated bisections still have an important role to play even if they sometimes take a few hours to complete, and enabling long-running test suites can add a lot of value. On the other hand, quick results are also needed for some use-cases. We should follow up and clarify what these are. It could involve automatically running a subset of tests based on the nature of the code changes. This is a crucial area on which to focus our efforts, to bring testing more into the development workflow rather than something that happens after the fact.
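To make the idea of running a subset of tests based on the code changes more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the path prefixes, the suite names and the `select_suites` function are all hypothetical, and nothing here reflects how KernelCI is actually configured.

```python
# Hypothetical mapping from kernel source path prefixes to test suite names.
# Neither the prefixes nor the suite names reflect KernelCI's configuration.
SUITES_BY_PREFIX = {
    "fs/": {"ltp-fs"},
    "mm/": {"ltp-mm", "kselftest-mm"},
    "drivers/gpu/": {"igt-gpu"},
}

# Assumed quick checks that run for every patch, whatever it touches.
BASELINE_SUITES = {"boot"}


def select_suites(changed_paths):
    """Return the sorted list of suites to run for a set of changed files."""
    selected = set(BASELINE_SUITES)
    for path in changed_paths:
        for prefix, suites in SUITES_BY_PREFIX.items():
            # A file selects every suite registered for a prefix it matches.
            if path.startswith(prefix):
                selected |= suites
    return sorted(selected)


if __name__ == "__main__":
    print(select_suites(["mm/slub.c", "fs/ext4/inode.c"]))
```

With a scheme along these lines, a patch touching only documentation would get the baseline checks, while a patch touching `mm/` would also trigger the memory-related suites. Longer suites could still run on the monitored Git branches.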

Automated Testing

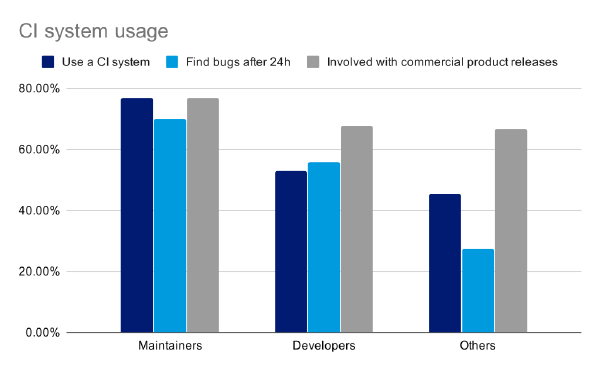

It seems that maintainers do more automated testing and find more bugs than developers. This tends to show that testing occurs quite late in the development workflow and developers don't get much feedback about regressions until their patches have been applied by maintainers. Testing patches sent to mailing lists is typically something that would help a lot here as well. Maybe some maintainers could ultimately require that developers get their patches validated by an automated system before they are applied, provided there is a convenient way of making this fit into the current email-based development workflow.

The fact that a clear majority of both maintainers and developers often find bugs more than 24h after a new kernel version is made available shows there is probably a lot of room for improvement. Some issues may be hard to find and genuinely require days of testing, but if issues regularly take that long to be discovered, the cause is more likely a lack of early test coverage and feedback.

Next Steps

We already have a clear set of short-term objectives: wrapping up, before the Linux Plumbers Conference at the end of August, some of the important things we’ve been working on during the past year. After that, testing patches should clearly be at the top of the priority list, so that issues can be reported early and directly by email. The second priority should be improving test coverage with quick results for patches, to provide early feedback in the development workflow. Longer tests such as LTP should also be run, using the current monitoring of Git branches (next, mainline, stable…). The third priority seems to be improving our web dashboard, starting with refining the requirements in this area. The dashboard is often complementary to email reporting, although we know it should remain possible to use KernelCI through email alone.

Overall, this has been a very useful exercise and we’re really thankful to all of you who responded. We should consider having another survey when we’ve made more progress, probably around this time next year.